Improved Open Source Backup:

Incorporating inline deduplication and sparse indexing solutions

G. P. E. Keeling

< Prev

Contents

Next >

Appendix D - Design of the first iteration

D.1. Storage format

Each client will have its own client storage directory on the server. This

will contain a separate directory for each backup made, plus a single directory

containing all the signature and data files.

The signature and data file format will be the same as in the proof of concept

programs (see Appendix B).

Each individual backup directory will contain a 'manifest' file, which are the

equivalents of the manifest files in the prototype. They contain the

instructions on how to reconstruct files from the data.

They will contain more information than in the prototype.

For example, they will contain lines representing various file system entry

types, such as directories, hard links, soft links, and so on.

Preceding each of these will be a line containing meta data for individual file

system entry being backed up (time stamps, permissions, etc). This allows for

the very significant cost saving measure of deciding whether to back up data

in a file name that has been saved before, based on modification time.

The following is an example of a section of a manifest file.

'r' lines are base64-encoded meta information for the following entry.

'd' is a directory

'f' is a regular file

'S' is a reference to a location in a signature/data file.

'l' is a soft link, which come in pairs. The first line is the location of the

file system entry. The second line is the target of the soft link.

Of course, there will be more file system entry types than this.

r003CA gB QAAF EH9 C Po Po A BAA BAA I BSB5nr BR8cCx BR8cCx A A J

d005D/home/graham/burp-cross-tools/mingw-w64-i686/libexec/gcc/i686-w64-

mingw32/4.7.3/install-tools

r003Cn7 gB QBYV IHt B Po Po A x8 BAA I BSByPY BR8cCw BR8cCw A A J

f0067/home/graham/burp-cross-tools/mingw-w64-i686/libexec/gcc/i686-w64-

mingw32/4.7.3/install-tools/mkheaders

S00130000/0000/000B/0C4F

r003Cn8 gB QBYg IHt B Po Po A 3S BAA I BSByPY BR8cCx BR8cCx A A J

f006B/home/graham/burp-cross-tools/mingw-w64-i686/libexec/gcc/i686-w64-

mingw32/4.7.3/install-tools/mkinstalldirs

S00130000/0000/000B/0C50

r003AA gB QBYY KH/ B Po Po A W BAA A BSByNP BR8cCw BR8cCw A A J

l0060/home/graham/burp-cross-tools/mingw-w64-i686/libexec/gcc/i686-w64-

mingw32/4.7.3/liblto_plugin.so

l0016liblto_plugin.so.0.0.0

It should be possible to process manifest files quickly, for various purposes,

such as listing all the files in a directory, merging two manifests together,

or finding the differences between two manifests. To facilitate this, the

file system entries in the manifest will be ordered alphabetically (with case

sensitive 'strcmp()' at each path component). The following simple list

demonstrates that ordering:

/

/abc/def/ghi

/abc/def/ghi/aaa

/abc/def/ghi/mmm/nnn/ooo

/abc/def/ghi/zzz

/abc/def/zzz

/abc/dff/zzz

/zzz

This means that, when given a pair of paths, you will know if one is 'less

than', 'equal to', or 'greater than' another path. The comparison, therefore

of two manifests, will always be linear. That is, it is only necessary to make

forward progress through any manifest.

A consequence of this is that, during backup, the initial client scan of the

meta data for the file system entries to be backed up should also proceed in

the same order.

D.2. Network data streams for backup

For this project, this will be changed. In order to maximise throughput, the

client will send the scan information, and as soon as the server starts

receiving it, it will start requesting data from the files that it needs.

The client will then open those files, compute the signatures of the blocks

that make up the contents of those files, and send the signatures to the

server.

As the server receives the signatures, it will attempt to find them in the

existing data store. If it fails, it requests the blocks it needs from the

client, which will then send them. The server will save the blocks and note

in the manifest the locations of the blocks that are needed to reproduce the

file.

So, there will be five main components to the network communications in a

backup. Three from client to server, and two from server to client.

Client to server stream 1 - file scan data.

This will generally be 'r' (meta data), followed by '<entry type>' (file

system entry type') with its path. Repeat until scan finished.

r...d...r...f...r...f...

(this is a representation of how each

component of the stream will look, showing only

the leading byte)

Server to client stream 1 - file requests.

This will be '<entry type>' with its path. Repeat until no more files

need to be requested.

The server keeps track of the files that it requested. It knows that the

next set of signatures from the client will be for the next file in the

list of requested files.

f...f...f...f...f...f...

Client to server stream 2 - signatures.

This will be 'R' (meta data), followed by one or more 'S' (signature) entries.

The meta data contains an index number unique to each signature/block in this

backup.

Repeat until no more signatures need to be sent.

R...S...S...R...S...S...

Server to client stream 2 - data requests.

This will be 'D' (data request), which contains the index number for the block

required.

Repeat until no more data needs to be requested.

D...D...D...D...

Client to server stream 3 - data.

This will be 'B' (block data), which contains the actual data requested.

Repeat until no more data needs to be sent.

B...B...B...B...

Each of these streams will be multiplexed over the same TCP connection, and

demultiplexed again on the other side. The TCP connection should never be left

waiting with no traffic passing over it,

in order to maximise throughput (simultaneously in both directions) and

minimise time spent.

Streams later in the above sequence have priority over those earlier in the

sequence.

So, for example, while the client has an outstanding signature or block to

send, it will not be sending scan information, (although it could still be

gathering scan information from disk).

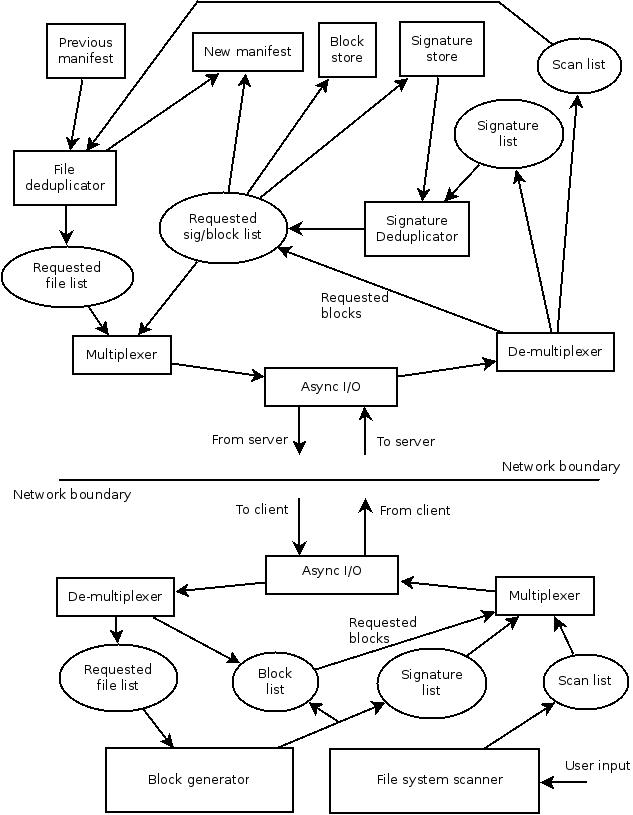

I have produced a high level diagram of the intended backup data flow around the

client and server, showing the major components and their inputs and outputs.

D.3. Scanning the client file system

In the original burp, the client would perform an initial scan of the files to

be backed up, sending the meta data to the server. Then based on the scan, once

finished, and the contents of the previous manifest, it would tell the client

which files that it needed the contents of and the client would then send the

data.

Due to the multiplexing nature of the new system, the file scan component needs

to be reworked. Instead of doing the whole scan from beginning to end

and then returning (after having sent all the data across the network), there

needs to be an interface that, when called, returns

the next file system entry in the scan. The calling routine can then decide

what to do with the data, or keep it for use later.

D.4. Pseudo code for main client backup process

while backup not finished {

// multiplexer

if nothing in write buffer {

try to fill write buffer from requested block list

if nothing in write buffer {

try to fill write buffer from signature list

if nothing in write buffer {

try to fill write buffer from scan list

}

}

}

run async i/o (this will attempt to empty the write buffer into

the async i/o buffer, as well as attempting to empty some of

the async i/o buffer onto the network, and attempting to fill

the read buffer with any incoming data)

if something in read buffer {

// demultiplexer

a) if incoming file request, add to requested file list, or

b) if incoming block request, mark block as requested

}

try to add to scan list by scanning the file system

try to generate more signatures and blocks from requested files

by opening the requested files and reading them

}

D.5. Pseudo code for main server backup process

while backup not finished

{

// multiplexer

if nothing in write buffer

{

try to fill write buffer from signatures from client

if nothing in write buffer

{

try to fill write buffer from scan list from

client

}

}

run async i/o (this will attempt to empty the write buffer

into the async i/o buffer, as well as attempting to empty some

of the async i/o buffer onto the network, and attempting to

fill the read buffer with any incoming data)

if something in read buffer

{

// demultiplexer

a) if incoming scan, add to scan list from client,

deduplicating via modification time comparison if

possible

or

b) if incoming signature, add to signature list from

client, deduplicating via signatures from signature

store if possible

or

c) if incoming block, add incoming block and signature

to data store

}

}

D.6. Network data streams for restore

Initially, the data streams for restore will be the same as in the original

burp. That is, the server will send a meta data line, then the type of file

system entry to create, and then zero or more lines containing the content to

restore.

In time, the new software can do similar deduplication in the reverse

direction. For example, chunks of the data store could be replicated onto the

client, and then instructions for how to use it to reconstruct the original

data could be sent, thereby meaning that each chunk would need to cross the

network once. For now though, I am aiming for reasonable restore times,

so this kind of reverse deduplication is beyond the scope of the project.

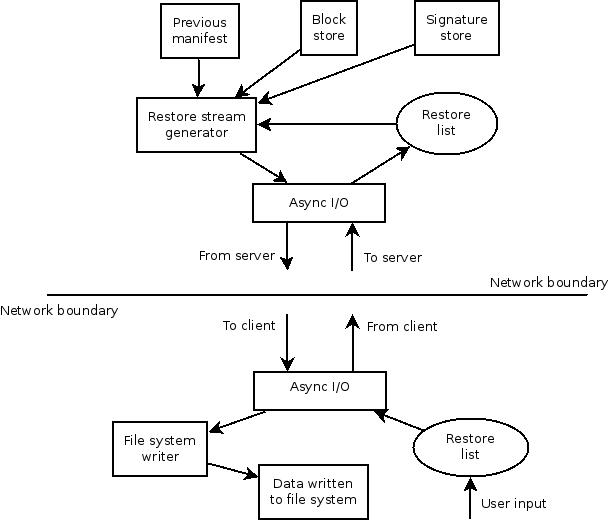

I have produced a high level diagram of the intended restore data flow around

the client and server, showing the major components and their inputs and

outputs.

< Prev

Contents

Next >

|